How to build cybersecurity resilience: understanding the fundamentals that deliver success

Master the fundamentals of data-driven resilience, so your team can turn complexity into clarity and build commercially sound recovery strategies

Resilience is crucially important for businesses today. Operating within an intricate, interconnected digital landscape under relentless regulatory pressure (DORA and NIS2) means that simple, playbook-based recovery plans are no longer enough. Businesses need to withstand adverse events elastically: response capabilities must be carefully planned and deployed so that they use the fewest resources while yielding the best possible recovery results.

NIST SP 800-160, a guide on incorporating security and resiliency into IT systems, reinforces this view. It defines resilience as “the ability to anticipate, withstand, recover from, and adapt to adverse conditions, stresses, attacks, or compromises on systems that use or are enabled by cyber resources”. This definition emphasises a holistic view of resilience, encompassing all stages of the disruption lifecycle and highlighting the importance of proactive measures informed by careful analysis.

However, the reality on the ground is different. In some situations, resilience programs are developed in highly dynamic environments where IT systems constantly expand and contract to adapt to business needs, making it difficult to know where to deploy resilience investments quickly. Often, however, they are developed in complex, slow-moving IT environments maintained by teams with a siloed and disjointed understanding of which systems matter most.

Both situations make it difficult to identify critical resilience gaps and prioritise investment based on actual business impact. Too often, decisions are driven by perceived urgency ("whoever screams the loudest") rather than objective data and risk assessments.

The good news is that most companies can break the impasse with data-driven resilience. Data-driven resilience is the strategic use of data to anticipate, withstand, adapt and evolve in the face of disruptions. Within data-driven resilience, understanding interdependencies between IT infrastructure and business processes is key. By leveraging real-time infrastructure data, organisations can shift from reactive recovery planning to proactive deployment of resilience investments, ensuring agility in the face of IT changes and enabling informed decisions before, during and after a crisis.

Measurable objectives are more effective than compliance-driven goals in building true resilience. Theoretical plans are insufficient: only actionable resilience strategies work in practice, but they require a deep understanding of dependencies and potential impacts. In this post, we review some of the fundamentals of data-driven resilience that lean security teams can adopt today.

Fundamental I: using data to show return on investment

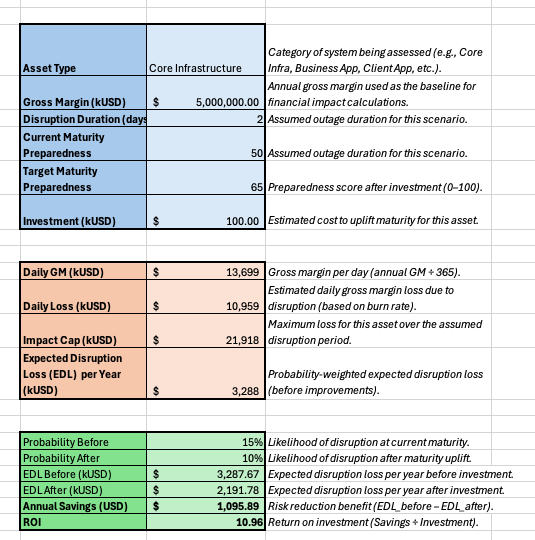

Data-driven insights are essential for demonstrating the return on investment (ROI) of Business Continuity Planning (BCP) spending. They move teams away from “gut feeling” planning and towards quantifiable evidence. Instead of relying on assumptions about potential disruptions and their impact, data allows organisations to prioritise investments based on actual risk exposure and potential financial losses.

There are four sources that help teams gain the right data insights:

- Infrastructure extracts detailing system configurations

- Dependency mappings illustrating interconnections between IT systems and their underlying infrastructure

- Business process mappings linking critical workflows to the relevant IT systems

- Supplier data highlighting where and how IT assets rely on external providers

The next step is to comprehensively map out the resilience dependencies within an organisation. Teams must connect key business processes to the applications and data they use. These applications are then linked to the IT services and suppliers that support them, which depend on software and infrastructure such as hosting and networks. Mapping these layers provides a clear understanding of how disruptions at the infrastructure level can cascade upwards, impacting critical business functions.
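As a minimal sketch of this layered mapping, the example below stores each "depends on" link in a plain dictionary and walks down from a business process to everything beneath it. All asset names (`payroll`, `hr-app` and so on) are hypothetical, and a real mapping would be generated from infrastructure extracts rather than typed by hand.

```python
# Hypothetical dependency map: each key depends on the assets listed as its value.
DEPENDS_ON = {
    "payroll": ["hr-app"],                   # business process -> application
    "hr-app": ["sso-service", "hr-db"],      # application -> IT services / data
    "sso-service": ["cloud-hosting"],        # IT service -> infrastructure
    "hr-db": ["cloud-hosting", "core-network"],
    "cloud-hosting": [],
    "core-network": [],
}


def all_dependencies(asset, depends_on):
    """Return every asset that `asset` relies on, directly or indirectly."""
    seen = set()
    stack = list(depends_on.get(asset, []))
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        stack.extend(depends_on.get(current, []))
    return seen


print(sorted(all_dependencies("payroll", DEPENDS_ON)))
```

Even a partial map of this kind makes it possible to ask which infrastructure a given business process ultimately rests on.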

The amount of data may feel overwhelming, but even partial coverage gives security teams better insights than before. And compared to the effort once required from product owners and stakeholders, modern data analytics solutions are far less costly.

Once critical resilience dependencies are identified, teams can assemble a data-driven model for resilience spending focused on assets with high business impact and low preparedness. In this context, ROI calculations should weigh the cost of implementing technical controls against the quantified losses from disruption events. By comparing the cost of these controls with the bottom line they protect, teams can demonstrate the financial benefits of resilience investments.
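One simple way to express this comparison, offered here as an assumption rather than a prescribed method, is to treat the reduction in expected yearly disruption losses as the benefit and divide the net benefit by the control cost. The function name and all figures below are hypothetical.

```python
def resilience_roi(loss_before, loss_after, control_cost):
    """ROI = (avoided loss - control cost) / control cost.

    loss_before / loss_after are the expected yearly disruption losses
    without and with the control; all inputs share the same currency.
    """
    if control_cost <= 0:
        raise ValueError("control_cost must be positive")
    avoided_loss = loss_before - loss_after
    return (avoided_loss - control_cost) / control_cost


# Hypothetical figures: a 50,000 control cuts expected yearly losses
# from 400,000 to 100,000.
roi = resilience_roi(loss_before=400_000, loss_after=100_000, control_cost=50_000)
print(f"ROI: {roi:.0%}")
```

A negative result means the control costs more than the losses it is expected to prevent, which is a useful signal in its own right.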

Once ROI can be easily demonstrated, the next step is prioritising resilience efforts. This can be done by mapping assets on an impact and preparedness quadrant. The matrix should identify "crown jewel" assets that have a high disruption impact but a low readiness state. These assets must be prioritised for immediate attention, with mathematical models used to quantify the risk and justify the allocation of resources to improve their resilience.
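The quadrant can be sketched in a few lines. The example below assumes impact and preparedness have already been scored on a 0 to 1 scale, uses 0.5 as an arbitrary cut-off and ranks assets with a deliberately simple score (impact multiplied by the preparedness gap). The asset names, scores, labels and scoring formula are all illustrative assumptions.

```python
def quadrant(impact, preparedness, threshold=0.5):
    """Place an asset on the impact/preparedness matrix.

    Both scores are assumed to be normalised to the 0..1 range.
    """
    high_impact = impact >= threshold
    prepared = preparedness >= threshold
    if high_impact and not prepared:
        return "prioritise now"      # high impact, low readiness
    if high_impact and prepared:
        return "maintain and test"
    if not high_impact and not prepared:
        return "improve when capacity allows"
    return "monitor"


# Hypothetical assets: (impact score, preparedness score)
assets = {
    "payments-api": (0.9, 0.2),
    "hr-db": (0.8, 0.7),
    "intranet-wiki": (0.2, 0.3),
}

# Rank by a simple risk score: impact weighted by the preparedness gap.
ranked = sorted(assets, key=lambda name: assets[name][0] * (1 - assets[name][1]), reverse=True)
for name in ranked:
    impact, preparedness = assets[name]
    print(name, "->", quadrant(impact, preparedness))
```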

With a solid ROI methodology in place, teams can then get to work translating technical infrastructure into a resilience priority list. At the simplest level, this can be done by force-ranking basic preparedness strategies. For example, teams should start with a criticality analysis to identify the most important systems, followed by backup onboarding to ensure data protection, then contact list creation for effective communication, then continuity planning to define recovery procedures and finally testing to validate the effectiveness of recovery plans. Sequencing matters because each step builds upon the previous one, creating a compounding resilience strategy.

Fundamental II: assembling the right team

While using a data-driven strategy will help you allocate resilience investments, it won’t suffice for executing and testing your resilience strategy. Assembling the right team is crucial. Successful resilience programs require bridging the gaps between business units, security teams and IT infrastructure teams to ensure clarity of execution and the necessary resource elasticity before, during and after a crisis.

A unified approach ensures that all aspects of the business are considered when assessing risks and developing mitigation strategies. Siloed teams often have conflicting priorities and lack a holistic view of the organisation's resilience capabilities, leading to drastically slower recovery times. A shared understanding of dependencies and “who owns what” is key to unlocking resilience preparedness.

At a high level, you must assemble a resilience team. Think about including stakeholders from Risk Management, Business Continuity Management (BCM) or Business Operations, IT Disaster Recovery (IT DR), Cyber Defence, Crisis Management and Infrastructure Engineering. Each company has its own unique structure, but you cannot go wrong by picking from the above departments.

Aim to assemble a diverse team. Resilience must be a cross-functional effort because each stakeholder brings unique expertise and perspectives to the problem of building resilience. Risk Management and Cyber Defence can help identify technological and non-technological threats to business continuity. BCM can help develop the actual business continuity plan, IT DR/Infrastructure Engineering can be tasked with testing and execution, while Crisis Management can help establish an overall coordination layer.

The key to building resilience is ensuring that individual business stakeholders take ownership of planning and execution within their areas. This approach embeds accountability across the organisation, preventing resilience from being seen as the responsibility of a single department and encouraging broader engagement and support. Effective resilience execution requires clearly defined roles and responsibilities.

Although building the right team may take time, the work should begin immediately and progress incrementally. Key deliverables must, at a minimum, include business impact assessments, critical asset identification, dependency mapping, control definition, plan testing and continuous oversight. This capability must be underpinned by a strong incident response process, trained to manage disruptions through an incident commander model.

Finally, in the absence of specialist resilience coordination and planning tools, the resilience team lead can be supported by a resilience committee. At a minimum, this should comprise the Chief Information Security Officer (CISO), the Chief Operating Officer (COO) and the IT platform and operations leads, who periodically review the resilience priority list, approve investments and oversee testing. This structure ensures that resilience efforts are aligned with business objectives and adequately resourced.

Fundamental III: building recovery based on facts

The third fundamental is developing recovery plans based on data and facts. This is done by first mapping actual dependencies across systems and services. The key objective here is producing an overview that consolidates inputs from infrastructure, business processes, suppliers and applications. Ideally, your team should build a data pipeline that consolidates all of these various inputs. It is crucial that this pipeline collects up-to-date data based on the currently deployed production infrastructure.

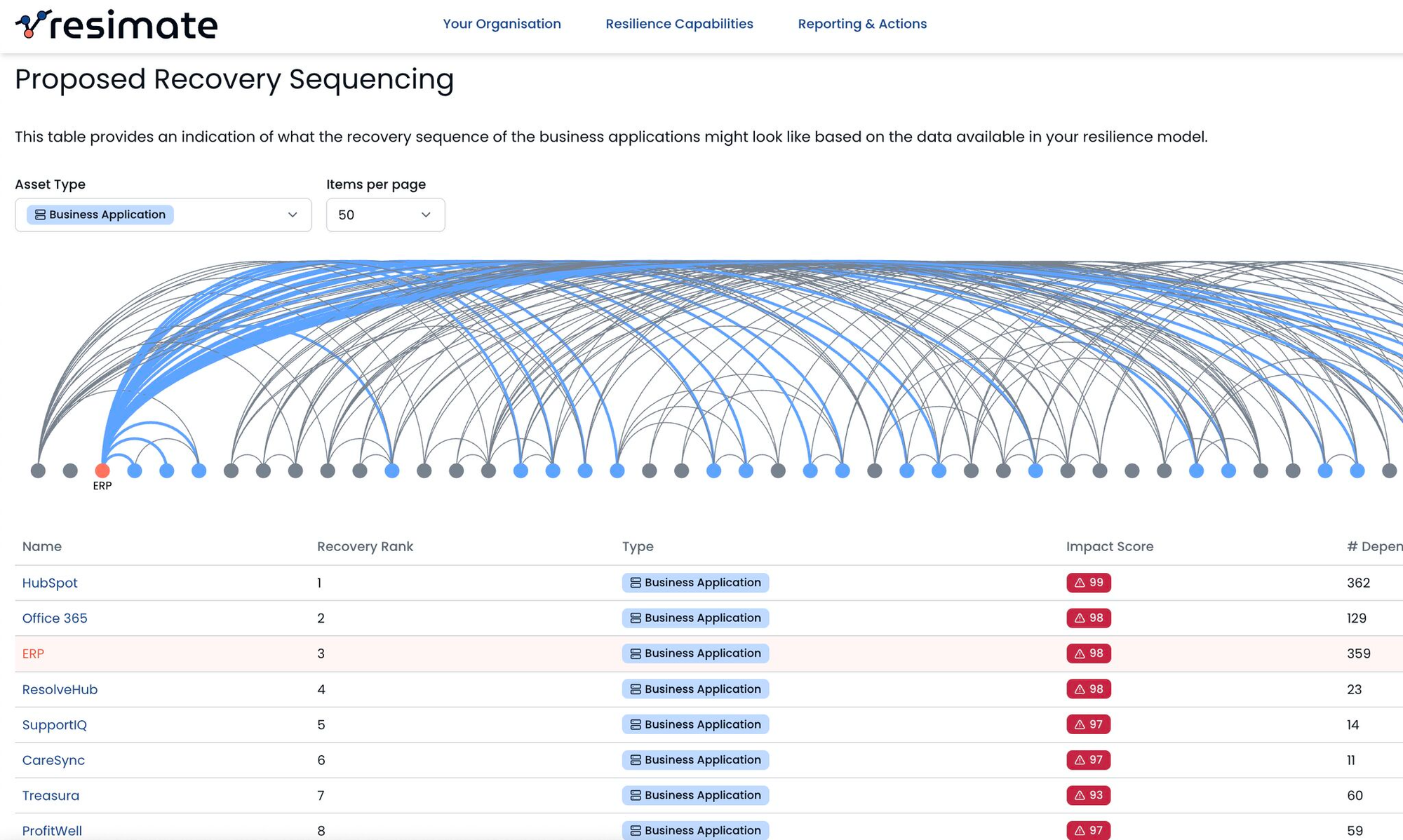

The next key step is putting this data into a knowledge graph to visualise relationships between dependencies. This visual representation enables a deeper understanding of how different components interact and rely on each other, facilitating dependency-aware risk management and planning.

When developing recovery sequences, focus on identifying critical assets and potential choke points that could create cascading failures. To achieve this, teams must calculate the blast radius against key business processes provoked by cascading failures in technology dependencies. This involves tracing the impact of a failure in one component across the entire system. The golden objective here is to unearth every single point of failure that would lead to unexpected operational disruptions. Understanding the full extent of cascading failures allows for targeted mitigation strategies and prioritisation of resources.
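A blast radius calculation of this kind can be sketched as a breadth-first walk over a "supports" graph. The example below is a simplified illustration: the asset names are hypothetical, and it assumes no redundancy, so any failure cascades to every dependant. Real environments with failover would need a richer model.

```python
from collections import deque

# Hypothetical map: each key supports (is depended on by) the listed assets.
SUPPORTS = {
    "core-network": ["hr-db", "payments-db"],
    "cloud-hosting": ["hr-db", "sso-service"],
    "sso-service": ["hr-app", "payments-app"],
    "hr-db": ["hr-app"],
    "payments-db": ["payments-app"],
    "hr-app": ["payroll"],
    "payments-app": ["customer-payments"],
}
BUSINESS_PROCESSES = {"payroll", "customer-payments"}


def blast_radius(failed_asset, supports, processes):
    """Return the business processes hit if `failed_asset` goes down.

    Assumes no redundancy: a failure always cascades to every dependant.
    """
    seen = {failed_asset}
    queue = deque([failed_asset])
    while queue:
        current = queue.popleft()
        for dependant in supports.get(current, []):
            if dependant not in seen:
                seen.add(dependant)
                queue.append(dependant)
    return seen & processes


for asset in SUPPORTS:
    hit = blast_radius(asset, SUPPORTS, BUSINESS_PROCESSES)
    print(f"{asset}: {sorted(hit)}")
```

Assets whose failure reaches several business processes at once, such as the shared sign-on service in this toy graph, are candidates for the choke points described above.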

With all this data in your hands, your resilience team can build prioritised recovery plans based on actual business impact, not internal politics. Additionally, recovery sequences, while mostly derived from logical dependencies and impact, can also be enhanced or prioritised with additional factors like regulatory relevance and customer-facing services. This ensures that the most critical functions are restored first, minimising disruption and financial losses.
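One possible way to derive such a sequence, shown here as a sketch rather than a definitive method, is a topological sort of the dependency map using Python's standard `graphlib` module (Python 3.9+). Assets are grouped into waves, and within each wave a hypothetical priority weight (standing in for regulatory relevance or customer-facing status) decides the order. All asset names and weights are illustrative.

```python
from graphlib import TopologicalSorter

# Hypothetical map: each key can only be restored after its listed dependencies.
DEPENDS_ON = {
    "payroll-app": {"hr-db", "sso-service"},
    "payments-app": {"payments-db", "sso-service"},
    "hr-db": {"core-network"},
    "payments-db": {"core-network"},
    "sso-service": {"core-network"},
    "core-network": set(),
}
# Hypothetical weights: higher means regulated or customer-facing.
PRIORITY = {"payments-app": 3, "payments-db": 3, "sso-service": 2}


def recovery_waves(depends_on, priority):
    """Group assets into waves; each wave only needs earlier waves restored."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready(), key=lambda a: (-priority.get(a, 0), a))
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(recovery_waves(DEPENDS_ON, PRIORITY), start=1):
    print(f"Wave {number}: {wave}")
```

Dependencies always win over priority in this sketch: a high-priority application is never scheduled before the database or network it needs.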

The final step is to use data-driven simulations to validate recovery strategies. Dependency maps should be used to formulate recovery hypotheses and then to run tabletop exercises, technical drills and cross-functional crisis simulations, ranging from quick walkthroughs to multi-day end-to-end exercises. These simulations should expose weaknesses in recovery plans and identify areas for improvement, ensuring that the organisation is prepared to respond effectively, not wishfully, to disruptions.

Conclusion

Resilient organisations understand their IT infrastructure and how it supports the business, and they are able to anticipate potential disruptions. When incidents occur, they have already done the planning needed to recover as quickly as possible. Achieving this level of preparedness and readiness requires a shift from a compliance-driven to a data-driven resilience approach.

Data-driven resilience uses data analytics and insights to inform resilience strategies and decision-making. It involves collecting data from various sources, including IT systems, business processes and threat intelligence feeds. This data is then analysed to identify vulnerabilities, assess risks and prioritise mitigation efforts.

By embracing data-driven resilience, organisations can move beyond simply meeting compliance requirements and build a “ready to go” posture. When shifting to a data-driven resilience approach, stick to the simple fundamentals of investing based on tangible ROI, assembling the right team and using facts to drive your planning. The rest should fall easily into place.

Platforms like resimate, by using the fundamentals described in this article, can help organisations accelerate their shift to data-driven resilience programs that anticipate disruptions, minimise downtime and maximise recovery performance.